Fill-Mask

Fill-Mask¶

Masked language modeling is the task of masking some of the words in a sentence and predicting which words should replace those masks. These models are useful when we want a statistical understanding of the language on which the model was trained. Source

Models for the fill-mask task are available at Hugging Face.

Predict the token to fill the mask¶

from transformers import pipeline

unmasker = pipeline('fill-mask', model='distilbert-base-uncased') # https://huggingface.co/distilbert-base-uncased

unmasker('The goal of life is [MASK].')

[{'score': 0.03619177266955376,

'token': 8404,

'token_str': 'happiness',

'sequence': 'the goal of life is happiness.'},

{'score': 0.030553530901670456,

'token': 7691,

'token_str': 'survival',

'sequence': 'the goal of life is survival.'},

{'score': 0.01697714626789093,

'token': 12611,

'token_str': 'salvation',

'sequence': 'the goal of life is salvation.'},

{'score': 0.01669849269092083,

'token': 4071,

'token_str': 'freedom',

'sequence': 'the goal of life is freedom.'},

{'score': 0.01526731252670288,

'token': 8499,

'token_str': 'unity',

'sequence': 'the goal of life is unity.'}]

s = 'The quick brown fox jumps over the [MASK] dog.'

res = unmasker(s)

res

[{'score': 0.07765579968690872,

'token': 25741,

'token_str': 'shaggy',

'sequence': 'the quick brown fox jumps over the shaggy dog.'},

{'score': 0.05409960821270943,

'token': 19372,

'token_str': 'barking',

'sequence': 'the quick brown fox jumps over the barking dog.'},

{'score': 0.01914549246430397,

'token': 2210,

'token_str': 'little',

'sequence': 'the quick brown fox jumps over the little dog.'},

{'score': 0.013522696681320667,

'token': 15926,

'token_str': 'stray',

'sequence': 'the quick brown fox jumps over the stray dog.'},

{'score': 0.013070916756987572,

'token': 9696,

'token_str': 'startled',

'sequence': 'the quick brown fox jumps over the startled dog.'}]
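Each prediction is a dictionary holding a score, a token id, the token string, and the completed sequence. The scores are probabilities over the model's whole vocabulary, so the five candidates shown need not sum to 1. The following is a minimal sketch of reading such a result. It assumes the predictions were copied by hand from the output above (with rounded scores) rather than produced by a live pipeline call, and the names `results`, `best`, and `covered` are illustrative only.

```python
# Predictions copied by hand from the output above (scores rounded).
results = [
    {'score': 0.0777, 'token': 25741, 'token_str': 'shaggy',
     'sequence': 'the quick brown fox jumps over the shaggy dog.'},
    {'score': 0.0541, 'token': 19372, 'token_str': 'barking',
     'sequence': 'the quick brown fox jumps over the barking dog.'},
    {'score': 0.0191, 'token': 2210, 'token_str': 'little',
     'sequence': 'the quick brown fox jumps over the little dog.'},
    {'score': 0.0135, 'token': 15926, 'token_str': 'stray',
     'sequence': 'the quick brown fox jumps over the stray dog.'},
    {'score': 0.0131, 'token': 9696, 'token_str': 'startled',
     'sequence': 'the quick brown fox jumps over the startled dog.'},
]

# Candidate with the highest score
best = max(results, key=lambda item: item['score'])

# Probability mass covered by the listed candidates
covered = sum(item['score'] for item in results)

print('best token:', best['token_str'])
print('mass covered by the listed candidates:', round(covered, 4))
```

Here the listed candidates cover well under a fifth of the probability mass, which suggests the model is far from certain about this mask.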

Pick a random choice of the results¶

from transformers import pipeline

import random

unmasker = pipeline('fill-mask', model='distilbert-base-uncased')

def fill_mask(inp):
    # Predict token for the mask
    predictions = unmasker(inp)
    # Store all sequences in a list
    predictions = [item['sequence'] for item in predictions]
    # Return one sequence
    return random.choice(predictions)

s = 'The quick brown fox jumps over the [MASK] dog.'

print(s)

print(fill_mask(s))

The quick brown fox jumps over the [MASK] dog.

the quick brown fox jumps over the startled dog.
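`random.choice` treats all five candidates as equally likely and ignores their scores. As a possible variation, the sketch below samples in proportion to the score instead. `weighted_choice` and its `rng` parameter are hypothetical names, and the predictions are copied by hand from the earlier output (with rounded scores) so that the snippet runs without loading a model.

```python
import random

# Predictions copied by hand from the earlier output (scores rounded).
predictions = [
    {'score': 0.0777, 'sequence': 'the quick brown fox jumps over the shaggy dog.'},
    {'score': 0.0541, 'sequence': 'the quick brown fox jumps over the barking dog.'},
    {'score': 0.0191, 'sequence': 'the quick brown fox jumps over the little dog.'},
    {'score': 0.0135, 'sequence': 'the quick brown fox jumps over the stray dog.'},
    {'score': 0.0131, 'sequence': 'the quick brown fox jumps over the startled dog.'},
]

def weighted_choice(predictions, rng=random):
    """Pick one sequence, favouring candidates with higher scores."""
    sequences = [item['sequence'] for item in predictions]
    weights = [item['score'] for item in predictions]
    # The weights are relative, so they do not need to sum to 1.
    return rng.choices(sequences, weights=weights, k=1)[0]

print(weighted_choice(predictions))
```

Passing a seeded `random.Random` instance as `rng` makes the draw reproducible.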

Insert a [MASK] tag randomly¶

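import random
import re

from nltk import word_tokenize  # needs the NLTK Punkt tokenizer data

def insert_mask(inp, mask='[MASK]'):
    # Tokenize input and keep only alphanumeric tokens.
    words = [word for word in word_tokenize(inp) if word.isalnum()]
    # Exit if words is empty
    if len(words) == 0:
        return inp
    # Replace the first whole-word occurrence of a randomly picked word,
    # so that e.g. 'a' is not replaced inside 'lazy'.
    word = random.choice(words)
    masked, count = re.subn(rf'\b{re.escape(word)}\b', lambda _: mask, inp, count=1)
    # Fall back to a plain substring match (1 = replace only the 1st match).
    return masked if count else inp.replace(word, mask, 1)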

s = 'The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.'

s = insert_mask(s)

print(s)

print(fill_mask(s))

The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the [MASK] fox.

the lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the yellow fox.

Apply fill-mask multiple times¶

s = 'The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.'

print('input:', s)

for i in range(5):
    s = fill_mask(insert_mask(s))

print('output:', s)

input: The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.

output: this lazy programmer jumps while the quick brown program jumps over the lazy programmer jumps over the fire fox.

s = 'The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.'

print('input:', s)

for i in range(15):
    s = fill_mask(insert_mask(s))

print('output:', s)

input: The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.

output: every fat bunny rides downhill while quick brownie jumps whilst the lazy rabbit jumps over the bunny fox.

s = 'The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.'

print('input:', s)

for i in range(50):
    s = fill_mask(insert_mask(s))

print('output:', s)

input: The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.

output: the cuckoo clock mechanism triggered by mini - rabbit strikes when a rabbit application runs alongside its own mouse.

s = 'The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.'

print('input:', s)

for i in range(100):
    s = fill_mask(insert_mask(s))

print('output:', s)

input: The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.

output: bugs r - bugs returns, mfb kes a speedy escape and goofy goofy mouse falls onto its fence.

s = 'The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.'

print('input:', s)

for i in range(500):
    s = insert_mask(s)
    # It can happen that no mask is included.
    # If so, break the loop
    if '[MASK]' not in s:
        break
    # Otherwise predict the fill
    s = fill_mask(s)

print('output:', s)

input: The lazy programmer jumps over the quick brown fox jumps over the lazy programmer jumps over the fire fox.

output: † = ~ & g. = & + = + s. = + ~ ~ + +.
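To put a rough number on how far a sentence drifts from the input, the sketch below counts the positions at which the tokens differ. `changed_positions` is a hypothetical helper, not part of the notebook. It assumes both sentences split into the same number of whitespace-separated tokens, which holds for the 5-iteration example above but not necessarily for the longer runs.

```python
def changed_positions(before, after):
    """Count whitespace-separated tokens that differ at the same position.

    The comparison is case-insensitive because the uncased model
    lowercases its output.
    """
    a, b = before.lower().split(), after.lower().split()
    if len(a) != len(b):
        raise ValueError('token counts differ; positions are not comparable')
    return sum(x != y for x, y in zip(a, b))

# Input and 5-iteration output copied from the example above.
original = ('The lazy programmer jumps over the quick brown fox jumps over '
            'the lazy programmer jumps over the fire fox.')
after_5 = ('this lazy programmer jumps while the quick brown program jumps over '
           'the lazy programmer jumps over the fire fox.')

print(changed_positions(original, after_5), 'of', len(original.split()), 'tokens changed')
```

Fewer positions can change than there were iterations, because a mask may land on the same position twice or be filled with the word it replaced.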

Comparison of models¶

# models is a dictionary of <name>:<mask> pairs.

models = {
    'distilbert-base-uncased': '[MASK]',  # https://huggingface.co/distilbert-base-uncased
    'distilroberta-base': '<mask>',  # https://huggingface.co/distilroberta-base
    'albert-base-v2': '[MASK]',  # https://huggingface.co/albert-base-v2
    'emilyalsentzer/Bio_ClinicalBERT': '[MASK]',  # https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT
    'GroNLP/hateBERT': '[MASK]',  # https://huggingface.co/GroNLP/hateBERT
}

for model, mask in models.items():
    print('\033[1m', model, '\033[0m', '\n')
    # Create unmasker with model
    unmasker = pipeline('fill-mask', model=model)
    # Create input
    inp = f'The goal of life is {mask}.'
    # Predict mask
    res = unmasker(inp)
    # Print sequences
    for item in res:
        print(item['sequence'])
    print('\n\n')

distilbert-base-uncased

the goal of life is happiness.

the goal of life is survival.

the goal of life is salvation.

the goal of life is freedom.

the goal of life is unity.

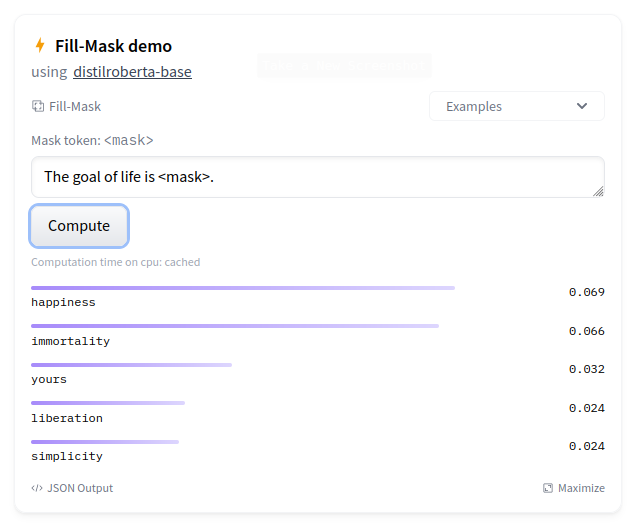

distilroberta-base

The goal of life is happiness.

The goal of life is immortality.

The goal of life is yours.

The goal of life is liberation.

The goal of life is simplicity.

albert-base-v2

the goal of life is joyah.

the goal of life is immortality.

the goal of life is happiness.

the goal of life is prosperity.

the goal of life is excellence.

emilyalsentzer/Bio_ClinicalBERT

Some weights of the model checkpoint at emilyalsentzer/Bio_ClinicalBERT were not used when initializing BertForMaskedLM: ['cls.seq_relationship.weight', 'cls.seq_relationship.bias']

- This IS expected if you are initializing BertForMaskedLM from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).

- This IS NOT expected if you are initializing BertForMaskedLM from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).

the goal of life is comfort.

the goal of life is met.

the goal of life is care.

the goal of life is achieved.

the goal of life is below.

GroNLP/hateBERT

the goal of life is death.

the goal of life is suicide.

the goal of life is health.

the goal of life is cake.

the goal of life is life.
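The comparison above hard-codes the mask token for each model. As an alternative sketch, the token can be read from the pipeline's tokenizer through `unmasker.tokenizer.mask_token`. `masked_prompt` is a hypothetical helper, and the `stub` object only stands in for a real pipeline so that the snippet runs without downloading a model.

```python
from types import SimpleNamespace

def masked_prompt(unmasker, template='The goal of life is {mask}.'):
    """Build a prompt using the mask token of the pipeline's own tokenizer."""
    return template.format(mask=unmasker.tokenizer.mask_token)

# Stand-in for pipeline('fill-mask', model='distilroberta-base'); a real
# pipeline exposes the same attribute path.
stub = SimpleNamespace(tokenizer=SimpleNamespace(mask_token='<mask>'))

print(masked_prompt(stub))
```

With a real pipeline the call would be `masked_prompt(unmasker)`, which avoids having to maintain a name-to-mask dictionary by hand.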