Crash Course into Python + Mapping Images with Python

Crash Course into Python + Mapping Images with Python¶

Variables¶

In Python you don’t have to specify the data type of a variable. The type is assigned automatically and can change during the runtime of a program.

# integer

a = 5

print(a)

print(type(a), '\n')

# floating point number

a = 5.2

print(a)

print(type(a), '\n')

# string

a = 'some text'

print(a)

print(type(a))

5

<class 'int'>

5.2

<class 'float'>

some text

<class 'str'>

Syntax for strings (text)¶

A string is declared through quotation marks. There are some options:

print('text in single quotation marks')

text in single quotation marks

print("text in double quotation marks")

text in double quotation marks

print('''text in triple quotation marks''')

text in triple quotation marks

print("""text in triple (double) quotation marks""")

text in triple (double) quotation marks

# Single and double quotation marks can be used to print one of them:

print('"A quote" (Author)')

"A quote" (Author)

# Triple quotation marks can be used to keep or insert line breaks:

print('''Line 1

Line 2

Line 3''')

Line 1

Line 2

Line 3

Casting from one type to another¶

a = 5

print(a)

print(type(a), '\n')

a = float(a)

print(a)

print(type(a), '\n')

a = str(a)

print(a)

print(type(a), '\n')

5

<class 'int'>

5.0

<class 'float'>

5.0

<class 'str'>
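
Casting only succeeds when the value can be interpreted as the target type. The following is a minimal sketch (the helper name to_int is made up for illustration) showing that int() rejects a string containing a decimal point, while converting through float() first works and drops the fractional part.

```python
def to_int(text):
    """Convert a numeric string to an int, dropping any fractional part."""
    try:
        return int(text)            # works for strings like '5'
    except ValueError:
        return int(float(text))     # '5.2' -> 5.2 -> 5

print(to_int('5'))
print(to_int('5.2'))
print(int(5.9))   # int() truncates toward zero, it does not round
```

Strings that are not numeric at all (for example 'some text') still raise a ValueError in this sketch.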

Functions¶

Like a variable, a function has a name and is thus callable. When we create a variable, we just write the name and assign data to it, like

txt = "The quick brown fox jumps over the lazy dog's leg."

len_txt = len(txt)

When we define a function, we start with the keyword def (for definition), followed by the name of the function (which is of our choice), followed by () and a :. In the next line(s) we insert the code of the function. If the result(s) of the function should be transported to the outside, this is done with the keyword return followed by the data.

def my_function():
    # code inside
    result = '...'  # local variable result
    return result

It’s not necessary to declare the type of the returned data when defining the function (in contrast to Processing/Java).

In some languages (like Processing/Java) the beginning and end of a function are marked by { }; in Python they are marked by indentation.

Everything inside the function is indented by one level. As soon as a line of code is no longer indented, it’s outside of the function. Data is passed into the function through the (). We can specify variables for the input data (parameters) and then assign inputs to those variables when calling the function:

def my_function(data):
    # data is available here
    output = data  # placeholder: replace with the processed data
    return output

def convert_dtypes(data):
    print(data)
    print(type(data), '\n')

    data = float(data)
    print(data)
    print(type(data), '\n')

    data = str(data)
    print(data)
    print(type(data), '\n')

# Call the function

convert_dtypes(10)

10

<class 'int'>

10.0

<class 'float'>

10.0

<class 'str'>

convert_dtypes('5.2')

5.2

<class 'str'>

5.2

<class 'float'>

5.2

<class 'str'>
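
A function can also hand back more than one result with a single return statement. This is a small sketch (the function name min_and_max is an illustrative choice): the values are returned as a tuple and can be unpacked into separate variables.

```python
def min_and_max(values):
    """Return the smallest and the largest item of a sequence as a tuple."""
    return min(values), max(values)

# Unpack the returned tuple into two variables
low, high = min_and_max([4, 202, 16])
print(low, high)
```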

It’s possible to assign default values to the parameters in the definition of a function. If an argument is omitted when the function is called, its default value is used.

def multiply_text(text='🐍', factor=5):
    out = (text + ' ') * factor
    return out

res = multiply_text('words and')

print(res)

res = multiply_text(factor=40)

print(res)

words and words and words and words and words and

🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍 🐍

For-Loop¶

for i in range(3):
    # do something n times
    print('iteration', i)
    print('~ ' * 7)

iteration 0

~ ~ ~ ~ ~ ~ ~

iteration 1

~ ~ ~ ~ ~ ~ ~

iteration 2

~ ~ ~ ~ ~ ~ ~

for i in range(2, 4):
    print(i)

2

3

for i in range(0, 100, 10):
    print(i, end=' ')

0 10 20 30 40 50 60 70 80 90

for i in range(10):
    print(i*10, end=' ')

0 10 20 30 40 50 60 70 80 90
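
The loops above use range with one, two, and three arguments. As a quick sketch, converting a range to a list makes the pattern range(start, stop, step) visible; the stop value is never included. The variable names are chosen only for this illustration.

```python
counting_up = list(range(5))            # start defaults to 0, step to 1
with_step = list(range(2, 10, 3))       # start=2, stop=10 (excluded), step=3
counting_down = list(range(10, 0, -2))  # a negative step counts backwards

print(counting_up)
print(with_step)
print(counting_down)
```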

Syntax¶

A for loop starts with the keyword for, followed by a variable (name of your choice), the keyword in, and an iterable object. The line ends with a :.

Everything inside the loop is indented by one level. This block of code is repeated for every item of the iterable object.

The first line of code without that extra indent is the first line that is not part of the loop.

for i in range(5):
    print('🦜' * (i+1))

print('🌵')

🦜

🦜🦜

🦜🦜🦜

🦜🦜🦜🦜

🦜🦜🦜🦜🦜

🌵
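
The same indentation rule decides which lines are repeated when a loop accumulates a value. In this short sketch (variable names are illustrative) the two indented lines run once per iteration, and the final print runs once after the loop has finished.

```python
total = 0
for number in range(1, 5):
    total += number                  # inside the loop: runs for 1, 2, 3, 4
    print('running total:', total)   # inside the loop as well

print('final total:', total)         # not indented: runs once, after the loop
```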

Lists¶

The syntax for a list is a pair of square brackets: [ ]. The items of the sequence are placed inside the square brackets, separated by ,.

food = ['apple', 'beer', 'cherry', 'date']

print(type(food))

print(len(food))

<class 'list'>

4

print(food[0]) # First item

print(food[-1]) # Last item

print(food[1:3]) # Slice from 1 to 3 (without 3)

print(food[:2]) # From 0 until 2 (without 2)

print(food[2:]) # From 2 (included) til end

apple

date

['beer', 'cherry']

['apple', 'beer']

['cherry', 'date']
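
Slices accept an optional third value, the step, and negative indices count from the end. The following sketch uses a separate list (letters, chosen for illustration) so the food list above stays untouched.

```python
letters = ['a', 'b', 'c', 'd', 'e', 'f']

every_second = letters[::2]     # from start to end in steps of 2
middle_step = letters[1:5:2]    # from index 1 to 5 (without 5) in steps of 2
last_two = letters[-2:]         # the last two items
reversed_copy = letters[::-1]   # a reversed copy, the original is unchanged

print(every_second, middle_step, last_two, reversed_copy)
```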

# Adding values:

food.append('elephant')

# Removing values:

food.remove('beer')

print(food)

['apple', 'cherry', 'date', 'elephant']

# Insert an element at a specific position

food.insert(1, 'bonobo')

print(food)

['apple', 'bonobo', 'cherry', 'date', 'elephant']

# Remove and return the last element

element = food.pop()

print('removed element:', element)

print('new list:', food)

removed element: elephant

new list: ['apple', 'bonobo', 'cherry', 'date']

# Return and remove an element from a specific position

element = food.pop(2)

print('removed element:', element)

print('new list:', food)

removed element: cherry

new list: ['apple', 'bonobo', 'date']

Sorting¶

import random

random.shuffle(food)

print('Random order:', food)

# Ascending order

food.sort()

print('Ascending order:', food)

# Descending order

food.sort(reverse=True)

print('Descending order:', food)

# Sort by length

food.sort(key=len)

print('Ordered by length:', food)

Random order: ['date', 'bonobo', 'apple']

Ascending order: ['apple', 'bonobo', 'date']

Descending order: ['date', 'bonobo', 'apple']

Ordered by length: ['date', 'apple', 'bonobo']
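
The method sort() changes the list in place. As a complementary sketch, the built-in function sorted() returns a new list and leaves the original untouched; it accepts the same key and reverse arguments. The list words is made up for this example.

```python
words = ['Date', 'apple', 'Bonobo']

# Default string order compares character codes: uppercase sorts before lowercase
default_order = sorted(words)

# A key function is applied to each item before comparing
case_insensitive = sorted(words, key=str.lower)

print(default_order)
print(case_insensitive)
print(words)   # the original list is unchanged
```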

Iterating over a list with a for-loop¶

for item in food:
    print(item)

date

apple

bonobo

# The function enumerate yields a tuple of (index, value) for each item
for index, value in enumerate(food):
    print(index, value)

0 date

1 apple

2 bonobo
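
Two related tools are worth a quick sketch: enumerate takes an optional start value for the counter, and zip iterates over two lists in parallel. The lists names and lengths are illustrative and independent of the food list above.

```python
names = ['date', 'apple', 'bonobo']
lengths = [len(name) for name in names]

# Start counting at 1 instead of 0
numbered = list(enumerate(names, start=1))

# Pair up items that share the same position in both lists
paired = list(zip(names, lengths))

for number, name in numbered:
    print(number, name)
for name, length in paired:
    print(name, length)
```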

Data types in a list¶

One list can contain items of different data types:

num_list = [num for num in range(0, -7, -2)] # List comprehension

mixed_type_list = [0, 'some words', 3.13, -4.24e-13, num_list]

for item in mixed_type_list:
    print(str(item).ljust(15), '🐍', type(item))

0 🐍 <class 'int'>

some words 🐍 <class 'str'>

3.13 🐍 <class 'float'>

-4.24e-13 🐍 <class 'float'>

[0, -2, -4, -6] 🐍 <class 'list'>
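
The list comprehension used for num_list is a compact way of writing a loop that appends to a list. As a brief sketch with illustrative names, a comprehension can also transform and filter items, and it produces the same result as the equivalent for-loop.

```python
# Transform every item
squares = [n * n for n in range(6)]

# Transform only the items that pass the condition
even_squares = [n * n for n in range(6) if n % 2 == 0]

# The same result written as a regular loop
even_squares_loop = []
for n in range(6):
    if n % 2 == 0:
        even_squares_loop.append(n * n)

print(squares)
print(even_squares)
```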

Libraries (Packages)¶

The official repository for libraries is the Python Package Index https://pypi.org/

The recommended way to install external libraries is Python’s package installer pip, unless you work in a conda environment. In that case it’s recommended to first try conda’s package index.

Execute in an activated environment:

# Install a package via conda

conda install <name of the package>

If that does not work, make sure pip is installed in your activated environment:

# Install pip via conda

conda install pip

# Install a package with pip

pip install <name of the package>

Working with Images¶

The de facto standard library for working with images is Pillow (the successor of PIL), a third-party package.

See readthedocs.io for a tutorial.

from PIL import Image

from IPython.display import display # Library for displaying images in a Jupyter Notebook.

Load and display an image¶

path = 'data/landscape.png'

# This creates an instance of the imported class Image

img = Image.open(path)

# Display the image

display(img)

# Inspect the image

print('size:', img.size)

print('mode:', img.mode)

print('format:', img.format)

size: (750, 560)

mode: RGBA

format: PNG

Geometrical transformations¶

display(img.transpose(Image.FLIP_LEFT_RIGHT))

display(img.rotate(-10, expand=True))

Accessing individual channels¶

img = Image.open('data/landscape.png')

r, g, b, a = img.split()

img = Image.merge("RGBA", (b, r, g, a))

display(img)

Cropping¶

width, height = img.size # Extract the tuple returned by img.size into 2 variables

display(img.crop((0, height/2, width, height/2+20))) # Left, upper, right, lower pixel.
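
The crop box is a tuple of pixel coordinates in the order (left, upper, right, lower), measured from the top-left corner. As a sketch in plain Python (the helper horizontal_strip_box is hypothetical and not part of Pillow), the box for a full-width strip starting at the vertical middle can be computed with integer arithmetic:

```python
def horizontal_strip_box(width, height, strip_height):
    """Return (left, upper, right, lower) for a full-width strip
    that starts at the vertical middle of the image."""
    upper = height // 2                        # // keeps the coordinate an int
    lower = min(upper + strip_height, height)  # do not run past the image
    return (0, upper, width, lower)

# Size of the landscape image reported above: 750 x 560 pixels
box = horizontal_strip_box(750, 560, 20)
print(box)
```

A tuple like this could then be passed to img.crop(box).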

Next we’ll slice the image into pieces, store them in a list, shuffle the list and put them back into an image.

width, height = img.size

height_slice = 20

num_slices = height // height_slice # / returns a float, // returns an int

slices = [] # Empty list to hold the slices

# Iterate and append slices to the list

for i in range(num_slices):
    # Crop part
    part = img.crop((0, i*height_slice, width, (i+1)*height_slice)) # Left, upper, right, lower pixel.
    # Append it to the list
    slices.append(part)

# Shuffle list

import random

random.shuffle(slices)

# Iterate again and paste the parts into the image

new_img = img.copy()

for i, part in enumerate(slices):
    new_img.paste(part, (0, i*height_slice))

# Display image

display(new_img)
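
The slicing logic can be checked without an image by looking only at the crop boxes. This is a sketch under the assumption of a 750 x 560 image; the helper slice_boxes is made up for illustration. Unlike the loop above, it also includes a shorter last slice when the height is not a multiple of the slice height.

```python
import random

def slice_boxes(width, height, slice_height):
    """Return the (left, upper, right, lower) boxes of all horizontal slices."""
    return [(0, top, width, min(top + slice_height, height))
            for top in range(0, height, slice_height)]

boxes = slice_boxes(750, 560, 20)
print(len(boxes), boxes[0], boxes[-1])

# Shuffle a copy so the original order stays available
shuffled = boxes.copy()
random.shuffle(shuffled)
```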

Image manipulation with NumPy¶

numpy.org – “The fundamental package for scientific computing with Python”.

Tutorials:

import numpy as np

from PIL import Image

Read an image as NumPy array¶

path = 'data/landscape.png'

# Open image as Image object

img = Image.open(path)

# Read Image object as numpy array

img_a = np.asarray(img)

# Output specs of the array

print('dimensions:', img_a.ndim)

print('shape:', img_a.shape)

print('size (values in total):', img_a.size) # height * width * channels

dimensions: 3

shape: (560, 750, 4)

size (values in total): 1680000
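
The shape of an image array reads as (height, width, channels), which is the reverse of the (width, height) order that img.size reports for the PIL image. As a small sketch using only the numbers printed above, the array size counts every single value, so the number of pixels is smaller by the factor of the channel count:

```python
import math

shape = (560, 750, 4)            # shape printed above
height, width, channels = shape

num_values = math.prod(shape)    # what the array attribute size reports
num_pixels = height * width      # one pixel consists of 4 values (RGBA)

print(num_values, num_pixels)
```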

Sorting values¶

img = Image.fromarray(np.sort(img_a, axis=0))

display(img)

img = Image.fromarray(np.sort(img_a, axis=1))

display(img)

img = Image.fromarray(np.sort(img_a, axis=2))

display(img)

# Create a numpy array of random values

from numpy.random import default_rng

rng = default_rng()

a = rng.integers(low=0, high=256, size=18) # high is exclusive, so 256 allows values up to 255

print(a)

[202 214 218 40 91 239 4 202 16 55 219 48 232 176 187 45 99 89]

# Reshape into an image format (3 color channels at the end)

a = a.reshape(2, 3, 3) # height, width, channels

print(a)

[[[202 214 218]

[ 40 91 239]

[ 4 202 16]]

[[ 55 219 48]

[232 176 187]

[ 45 99 89]]]

display(Image.fromarray(a.astype(np.uint8)))

# This function takes 2 arguments:

# a numpy array with an image shape

# a factor by which the image should be resized

# The function returns the resized image as PIL Image object

def resize_image_from_array(array, factor):
    # Convert array dtype to integer
    array = array.astype(np.uint8)
    # Read as image
    img = Image.fromarray(array)
    # Resize image
    array_height, array_width, _ = array.shape
    img = img.resize((array_width*factor, array_height*factor), Image.NEAREST)
    # Return image
    return img

Original¶

resized_img = resize_image_from_array(a, 64)

display(resized_img)

print(a)

[[[202 214 218]

[ 40 91 239]

[ 4 202 16]]

[[ 55 219 48]

[232 176 187]

[ 45 99 89]]]

Sorted on axis=None¶

np.sort(a, axis=None)

array([ 4, 16, 40, 45, 48, 55, 89, 91, 99, 176, 187, 202, 202,

214, 218, 219, 232, 239])

a_sorted = np.sort(a, axis=None).reshape(2, 3, 3) # axis=None flattens the array, so it needs a reshape

resized_img = resize_image_from_array(a_sorted, 64)

display(resized_img)

print(a_sorted)

[[[ 4 16 40]

[ 45 48 55]

[ 89 91 99]]

[[176 187 202]

[202 214 218]

[219 232 239]]]

Sorted on axis=0¶

a_sorted = np.sort(a, axis=0)

resized_img = resize_image_from_array(a_sorted, 64)

display(resized_img)

print(a_sorted)

[[[ 55 214 48]

[ 40 91 187]

[ 4 99 16]]

[[202 219 218]

[232 176 239]

[ 45 202 89]]]

Sorted on axis=1¶

a_sorted = np.sort(a, axis=1)

resized_img = resize_image_from_array(a_sorted, 64)

display(resized_img)

print(a_sorted)

[[[ 4 91 16]

[ 40 202 218]

[202 214 239]]

[[ 45 99 48]

[ 55 176 89]

[232 219 187]]]

Sorted on axis=2¶

a_sorted = np.sort(a, axis=2)

resized_img = resize_image_from_array(a_sorted, 64)

display(resized_img)

print(a_sorted)

[[[202 214 218]

[ 40 91 239]

[ 4 16 202]]

[[ 48 55 219]

[176 187 232]

[ 45 89 99]]]
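
To make the meaning of the three axes concrete, the following sketch reproduces the sorts above with nested Python lists instead of NumPy. It is only an illustration of what np.sort does per axis, not how NumPy implements it; the function names are made up. Axis 2 sorts the channel values inside each pixel, axis 1 sorts each channel along a row, and axis 0 sorts each channel down a column.

```python
pixels = [[[202, 214, 218], [40, 91, 239], [4, 202, 16]],
          [[55, 219, 48], [232, 176, 187], [45, 99, 89]]]

def sort_axis2(img):
    # Sort the channel values within every pixel
    return [[sorted(px) for px in row] for row in img]

def sort_axis1(img):
    out = []
    for row in img:
        # zip(*row) groups the values of one channel across the row
        channels = [sorted(channel) for channel in zip(*row)]
        out.append([list(px) for px in zip(*channels)])
    return out

def sort_axis0(img):
    height, width, depth = len(img), len(img[0]), len(img[0][0])
    out = [[[0] * depth for _ in range(width)] for _ in range(height)]
    for x in range(width):
        for c in range(depth):
            # Values of one channel at one column position, top to bottom
            column = sorted(img[y][x][c] for y in range(height))
            for y in range(height):
                out[y][x][c] = column[y]
    return out

print(sort_axis0(pixels))
print(sort_axis1(pixels))
print(sort_axis2(pixels))
```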

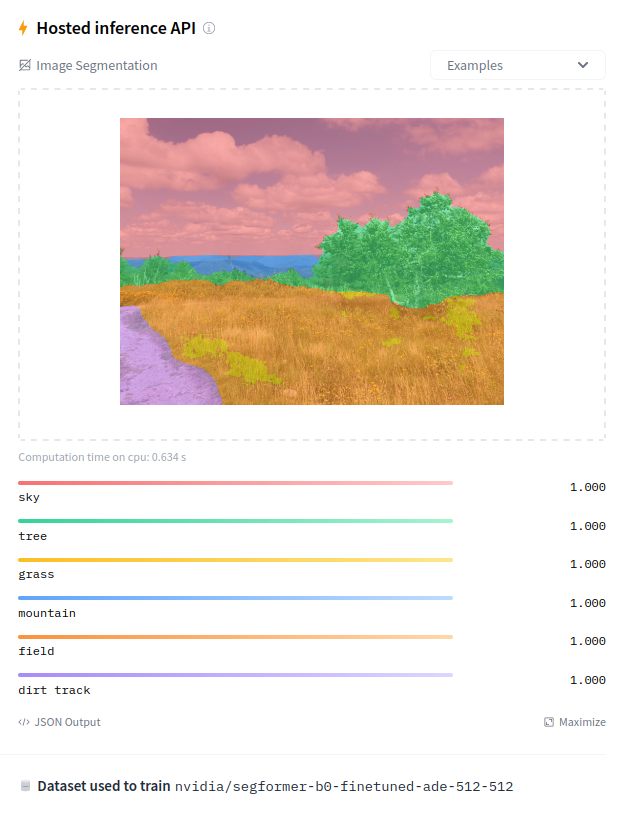

Examples using the library skimage (scikit-image)¶

From this page.

# Function to convert skimages into PIL images (for displaying them)

def skimage_to_PIL(img):
    # Data is in range 0 to 1, map it to 0 to 255.
    # Multiply into a new array so the caller's array is not modified.
    img = img * 255
    # Convert from float to int
    img = img.astype(np.uint8)
    # Create PIL Image
    img = Image.fromarray(img)
    return img

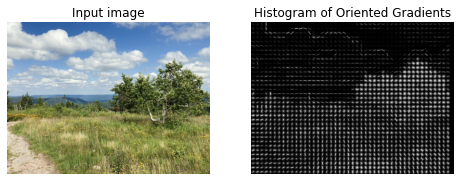

Histogram of Oriented Gradients (HOG)¶

import matplotlib.pyplot as plt

from skimage.feature import hog

from skimage import data, exposure

image = data.astronaut()

path = '../img/image_expand.png'

image = np.asarray(Image.open(path))

fd, hog_image = hog(image, orientations=8, pixels_per_cell=(16, 16),
                    cells_per_block=(1, 1), visualize=True, channel_axis=-1)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(8, 4), sharex=True, sharey=True)

ax1.axis('off')

ax1.imshow(image, cmap=plt.cm.gray)

ax1.set_title('Input image')

# Rescale histogram for better display

hog_image_rescaled = exposure.rescale_intensity(hog_image, in_range=(0, 10))

ax2.axis('off')

ax2.imshow(hog_image_rescaled, cmap=plt.cm.gray)

ax2.set_title('Histogram of Oriented Gradients')

plt.show()

display(skimage_to_PIL(hog_image_rescaled))

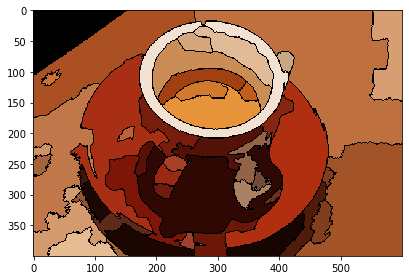

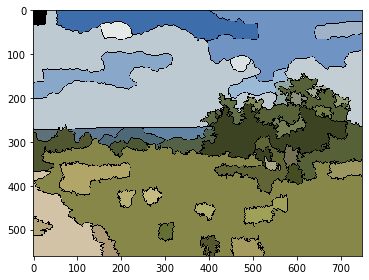

RAG Merging¶

from skimage import data, io, segmentation, color

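# Note: in scikit-image 0.20 and later these functions live in skimage.graph
# (use `from skimage import graph` there).
from skimage.future import graph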

import numpy as np

def _weight_mean_color(graph, src, dst, n):
    """Callback to handle merging nodes by recomputing mean color.

    The method expects that the mean color of `dst` is already computed.

    Parameters
    ----------
    graph : RAG
        The graph under consideration.
    src, dst : int
        The vertices in `graph` to be merged.
    n : int
        A neighbor of `src` or `dst` or both.

    Returns
    -------
    data : dict
        A dictionary with the `"weight"` attribute set as the absolute
        difference of the mean color between node `dst` and `n`.
    """
    diff = graph.nodes[dst]['mean color'] - graph.nodes[n]['mean color']
    diff = np.linalg.norm(diff)
    return {'weight': diff}

def merge_mean_color(graph, src, dst):
    """Callback called before merging two nodes of a mean color distance graph.

    This method computes the mean color of `dst`.

    Parameters
    ----------
    graph : RAG
        The graph under consideration.
    src, dst : int
        The vertices in `graph` to be merged.
    """
    graph.nodes[dst]['total color'] += graph.nodes[src]['total color']
    graph.nodes[dst]['pixel count'] += graph.nodes[src]['pixel count']
    graph.nodes[dst]['mean color'] = (graph.nodes[dst]['total color'] /
                                      graph.nodes[dst]['pixel count'])

img = data.coffee()

labels = segmentation.slic(img, compactness=30, n_segments=400, start_label=1)

g = graph.rag_mean_color(img, labels)

labels2 = graph.merge_hierarchical(labels, g, thresh=35, rag_copy=False,
                                   in_place_merge=True,
                                   merge_func=merge_mean_color,
                                   weight_func=_weight_mean_color)

out = color.label2rgb(labels2, img, kind='avg', bg_label=0)

out = segmentation.mark_boundaries(out, labels2, (0, 0, 0))

io.imshow(out)

io.show()

img = Image.open(path)

img = img.convert('RGB')

img = np.asarray(img)

labels = segmentation.slic(img, compactness=30, n_segments=400, start_label=1)

g = graph.rag_mean_color(img, labels)

labels2 = graph.merge_hierarchical(labels, g, thresh=35, rag_copy=False,
                                   in_place_merge=True,
                                   merge_func=merge_mean_color,
                                   weight_func=_weight_mean_color)

out = color.label2rgb(labels2, img, kind='avg', bg_label=0)

out = segmentation.mark_boundaries(out, labels2, (0, 0, 0))

io.imshow(out)

io.show()

display(skimage_to_PIL(out))

Image Classification with DeepFace¶

Installation:

conda activate <your_environment>

pip install deepface

from deepface import DeepFace

paths = ['data/gen_face_01.png', 'data/gen_face_02.png', 'data/gen_face_03.png']

path = paths[1]

img = Image.open(path)

display(img)

obj = DeepFace.analyze(img_path = path, actions = ['age', 'emotion'])

Action: emotion: 100%|███████████████████████| 2/2 [00:00<00:00, 3.78it/s]

obj

{'age': 32,

'region': {'x': 123, 'y': 62, 'w': 137, 'h': 137},

'emotion': {'angry': 13.24506629974045,

'disgust': 1.0598734652920643,

'fear': 76.40647151508757,

'happy': 0.0745138605301148,

'sad': 6.769444451895025,

'surprise': 1.6466912863666585,

'neutral': 0.7979447497692556},

'dominant_emotion': 'fear'}

type(obj)

dict
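
Because the result is a regular dictionary, it can be explored with built-in functions. The following sketch copies the emotion scores from the output above into a literal (so it runs without DeepFace) and recomputes the dominant emotion as the key with the highest score.

```python
# Scores copied from the DeepFace output shown above
emotion = {'angry': 13.24506629974045,
           'disgust': 1.0598734652920643,
           'fear': 76.40647151508757,
           'happy': 0.0745138605301148,
           'sad': 6.769444451895025,
           'surprise': 1.6466912863666585,
           'neutral': 0.7979447497692556}

# Key with the highest value
dominant = max(emotion, key=emotion.get)

# All (name, score) pairs, highest score first
ranked = sorted(emotion.items(), key=lambda pair: pair[1], reverse=True)

print(dominant)
for name, score in ranked[:3]:
    print(name.ljust(10), round(score, 2))
```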

Draw a rect around the detected face¶

# We can access elements of a dictionary with the syntax:

# name_of_object["key in quotation marks"]

region = obj['region']

print(region)

{'x': 123, 'y': 62, 'w': 137, 'h': 137}

from PIL import ImageDraw

img_face_region = Image.open(path)

draw = ImageDraw.Draw(img_face_region)

# Rectangle format: (left, top, right, bottom)

left = region['x']

top = region['y']

right = left + region['w']

bottom = top + region['h']

draw.rectangle((left, top, right, bottom))

display(img_face_region)
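
The conversion from the region dictionary (x, y, width, height) to the rectangle format (left, top, right, bottom) can be wrapped in a small function. This is a sketch; the name region_to_box is an illustrative choice and not part of DeepFace or Pillow.

```python
def region_to_box(region):
    """Convert {'x', 'y', 'w', 'h'} into (left, top, right, bottom)."""
    left = region['x']
    top = region['y']
    right = left + region['w']
    bottom = top + region['h']
    return (left, top, right, bottom)

# Region taken from the output above
box = region_to_box({'x': 123, 'y': 62, 'w': 137, 'h': 137})
print(box)
```

The resulting tuple has the form that draw.rectangle() expects.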

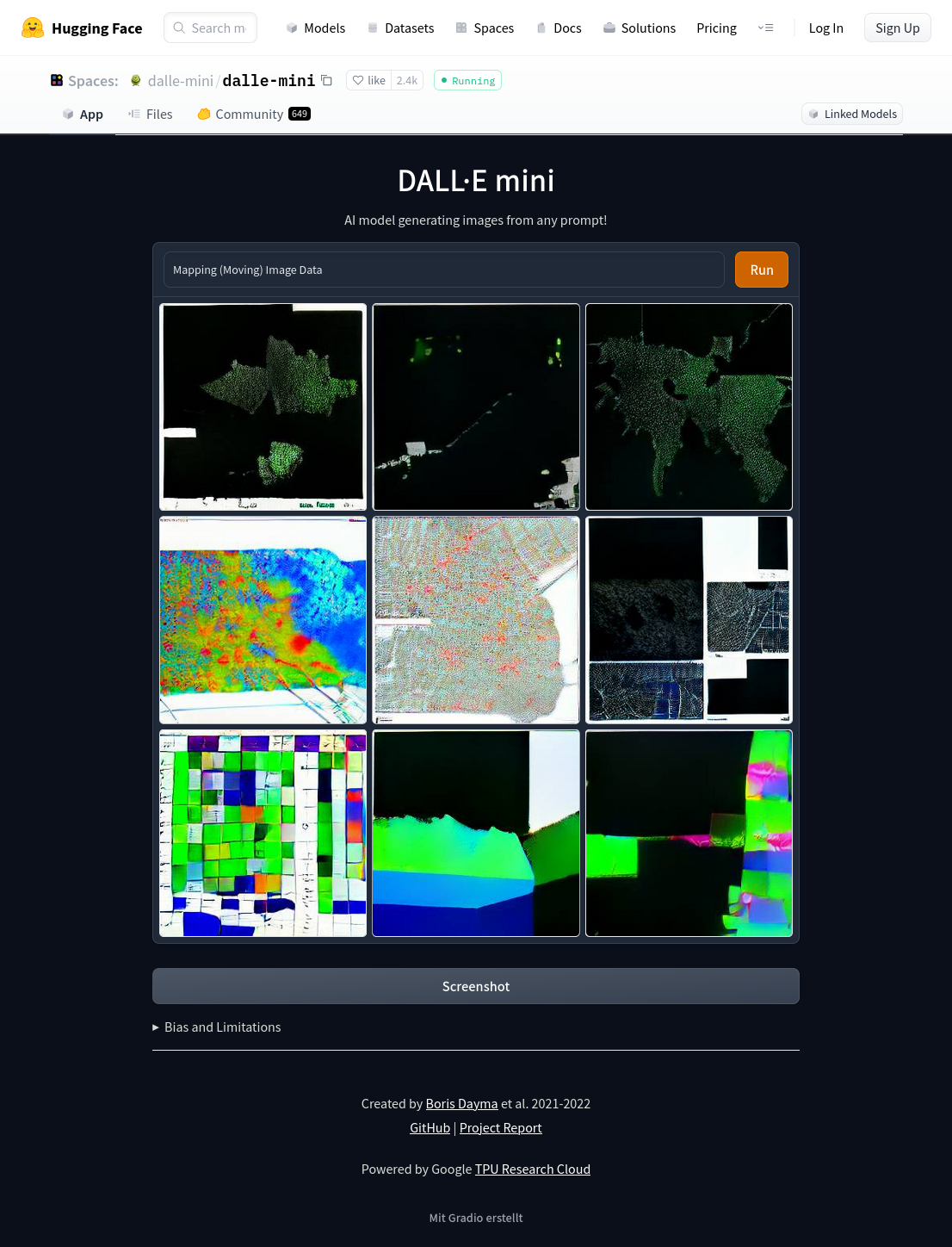

Image Classification with the Hugging Face library¶

Installation:

# For example:

conda activate <your_environment>

pip install transformers[torch]

For Mac users (not working on M1):

conda activate <your environment>

pip install 'transformers[torch]'

For more options see.

Distilled Data-efficient Image Transformer (base-sized model)¶

The code below is adapted from How to use

from transformers import AutoFeatureExtractor, AutoModelForImageClassification

extractor = AutoFeatureExtractor.from_pretrained("facebook/deit-base-distilled-patch16-224")

model = AutoModelForImageClassification.from_pretrained("facebook/deit-base-distilled-patch16-224")

from PIL import Image

import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

img = Image.open(requests.get(url, stream=True).raw)

display(img)

inputs = extractor(images=img, return_tensors="pt")

# forward pass

outputs = model(**inputs)

logits = outputs.logits

# model predicts one of the 1000 ImageNet classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

Predicted class: tabby, tabby cat
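
The step from logits to a class name is an argmax followed by a dictionary lookup. The sketch below mimics it in plain Python with three made-up logits and a made-up id2label mapping (both are assumptions for illustration; the real model outputs 1000 values). It also shows how a softmax would turn the logits into probabilities.

```python
import math

# Hypothetical values, not taken from the real model
logits = [1.2, 4.7, 0.3]
id2label = {0: 'dog', 1: 'tabby, tabby cat', 2: 'sofa'}

# argmax: index of the largest logit
predicted_class_idx = max(range(len(logits)), key=lambda i: logits[i])
print('Predicted class:', id2label[predicted_class_idx])

# softmax: subtract the maximum first for numerical stability
largest = max(logits)
exps = [math.exp(value - largest) for value in logits]
probabilities = [e / sum(exps) for e in exps]
print([round(p, 3) for p in probabilities])
```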

def img_classifier(path):
    img = Image.open(path)
    display(img)

    inputs = extractor(images=img, return_tensors="pt")

    # forward pass
    outputs = model(**inputs)
    logits = outputs.logits

    # model predicts one of the 1000 ImageNet classes
    predicted_class_idx = logits.argmax(-1).item()
    print("Predicted class:", model.config.id2label[predicted_class_idx])

img_classifier('data/gen_face_02.png')

Predicted class: gown

img_classifier('data/landscape.png')

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

/tmp/ipykernel_6206/2269211546.py in <module>

----> 1 img_classifier('data/landscape.png')

/tmp/ipykernel_6206/4168432019.py in img_classifier(path)

4 display(img)

5

----> 6 inputs = extractor(images=img, return_tensors="pt")

7

8 # forward pass

~/miniconda3/envs/cp/lib/python3.9/site-packages/transformers/models/deit/feature_extraction_deit.py in __call__(self, images, return_tensors, **kwargs)

152 images = [self.center_crop(image, self.crop_size) for image in images]

153 if self.do_normalize:

--> 154 images = [self.normalize(image=image, mean=self.image_mean, std=self.image_std) for image in images]

155

156 # return as BatchFeature

~/miniconda3/envs/cp/lib/python3.9/site-packages/transformers/models/deit/feature_extraction_deit.py in <listcomp>(.0)

152 images = [self.center_crop(image, self.crop_size) for image in images]

153 if self.do_normalize:

--> 154 images = [self.normalize(image=image, mean=self.image_mean, std=self.image_std) for image in images]

155

156 # return as BatchFeature

~/miniconda3/envs/cp/lib/python3.9/site-packages/transformers/image_utils.py in normalize(self, image, mean, std)

184 return (image - mean[:, None, None]) / std[:, None, None]

185 else:

--> 186 return (image - mean) / std

187

188 def resize(self, image, size, resample=PIL.Image.BILINEAR, default_to_square=True, max_size=None):

ValueError: operands could not be broadcast together with shapes (4,224,224) (3,)

# Updated classifier: convert rgba images into rgb

def img_classifier(path):
    img = Image.open(path)
    if img.mode == 'RGBA':
        img = img.convert('RGB')
    display(img)

    inputs = extractor(images=img, return_tensors="pt")

    # forward pass
    outputs = model(**inputs)
    logits = outputs.logits

    # model predicts one of the 1000 ImageNet classes
    predicted_class_idx = logits.argmax(-1).item()
    print("Predicted class:", model.config.id2label[predicted_class_idx])

img_classifier('data/landscape.png')

Predicted class: lakeside, lakeshore

BEiT (base-sized model, fine-tuned on ImageNet-22k)¶

from transformers import BeitFeatureExtractor, BeitForImageClassification

from PIL import Image

import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = BeitFeatureExtractor.from_pretrained('microsoft/beit-base-patch16-224-pt22k-ft22k')

model = BeitForImageClassification.from_pretrained('microsoft/beit-base-patch16-224-pt22k-ft22k')

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

# model predicts one of the 21,841 ImageNet-22k classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

Predicted class: tabby, tabby_cat

def image_classifier_beit(path):
    from transformers import BeitFeatureExtractor, BeitForImageClassification
    from PIL import Image

    img = Image.open(path)
    if img.mode == 'RGBA':
        img = img.convert('RGB')
    display(img)

    feature_extractor = BeitFeatureExtractor.from_pretrained('microsoft/beit-base-patch16-224-pt22k-ft22k')
    model = BeitForImageClassification.from_pretrained('microsoft/beit-base-patch16-224-pt22k-ft22k')

    inputs = feature_extractor(images=img, return_tensors="pt")
    outputs = model(**inputs)
    logits = outputs.logits

    # model predicts one of the 21,841 ImageNet-22k classes
    predicted_class_idx = logits.argmax(-1).item()
    print("Predicted class:", model.config.id2label[predicted_class_idx])

    # Return the class as well
    return model.config.id2label[predicted_class_idx]

res = image_classifier_beit('data/gen_face_02.png')

Predicted class: hair

res = image_classifier_beit('data/landscape.png')

Predicted class: red_oak